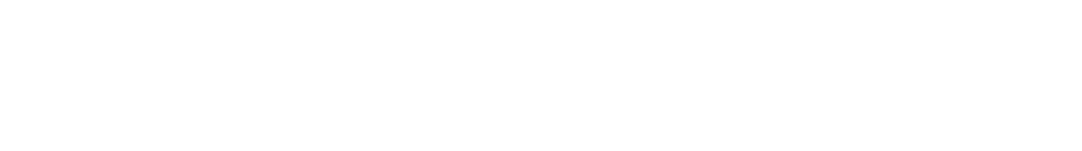

UNC computer scientist Ron Alterovitz in his lab. (photo by Donn Young)

If you haven’t come across a robot lately, it’s because they’re still not very good with people, says UNC computer scientist Ron Alterovitz. Humans are unpredictable — robots are limited by their programming. Humans are soft to the touch — robots are used to interacting with rigid materials. In the world of manufacturing, for example, robots do great at tasks involving wood or steel.

In Alterovitz’s lab, he and his team are teaching robots to work in the human world of variability and living tissue. Researchers don’t have to build whole machines from scratch — those already exist, Alterovitz says, and some of them are pretty impressive. Robots can wield a needle with precise control, or run, throw objects and carry things.

“We already have hardware that’s close to what we need,” Alterovitz says. “The challenge is, how do you actually program these robots so they can do something useful?”

He thinks of an elderly relative in an assisted-living center. “She had been this independent, spunky woman,” he says. “But the number of tasks that she couldn’t do by herself started increasing. One thing that was giving her trouble was putting on her compression stockings every day. I thought, why is it that we don’t have a robot that can help her do things like that?”

It wouldn’t make much sense to write a computer program just to have a robot help people put on their stockings. It would take a huge amount of code, and the result would be a robot extremely limited in its usefulness — what if you changed to a different type of hosiery?

Teaching robots human tasks

Instead, Alterovitz’s team writes code that allows a robot to be taught new tasks by ordinary people. You guide the robot’s limbs by hand through a task several times, and the robot notices what changes on each repetition and what stays the same.
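For readers who like to see ideas in code, here is a minimal sketch of that "what stays the same" step. It is an illustration only, not the lab's software: the feature names, the numbers and the threshold are all made up, and it assumes each demonstration is reduced to one measurement per feature.

```python
from statistics import pvariance


def consistent_features(demonstrations, threshold=0.01):
    """Return the features whose value barely changes across demonstrations.

    demonstrations: list of dicts, one per repetition, mapping a
    (hypothetical) feature name to a single recorded number.
    threshold: assumed cutoff on variance; below it a feature counts
    as "stays the same".
    """
    names = demonstrations[0].keys()
    steady = []
    for name in names:
        values = [demo[name] for demo in demonstrations]
        # Low variance across repetitions suggests the teacher kept
        # this feature fixed on purpose.
        if pvariance(values) < threshold:
            steady.append(name)
    return sorted(steady)


# Three invented repetitions of carrying a spoon.
demos = [
    {"spoon_tilt_deg": 0.1, "hand_height_cm": 12.0},
    {"spoon_tilt_deg": -0.05, "hand_height_cm": 18.5},
    {"spoon_tilt_deg": 0.0, "hand_height_cm": 9.0},
]
print(consistent_features(demos))
```

In this toy data the spoon's tilt hardly moves from one repetition to the next while the hand's height varies widely, so only the tilt is flagged as something the robot should preserve.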

“There are so many things that seem trivial to our minds,” Alterovitz says. “For example, at some young age we learned that you have to hold a plate of food level or all of it will slide off onto the floor.

“A robot knows nothing. You can try to program all these nuances, or you can create a method to teach a robot to perform skills and have the robot be able to do those things again in new environments.”

Alterovitz and grad students in his lab have been working with a robot called Nao (“now”), a little two-foot humanoid made by a French company called Aldebaran Robotics. They’ve taught Nao how to add sugar to tea and how to wipe down a table. These are small steps, Alterovitz says, on the road to more complicated tasks such as putting on stockings. The important thing his group has shared with other computer scientists is how to have a robot judge what’s important about a new task it’s learning, like keeping the spoon level or maneuvering around obstacles.

On the surface, little Nao looks like the most advanced technology in the Alterovitz lab. But another robot that looks like just a couple of rods and boxes may start helping people sooner than humanoid robots will. It’s a surgery robot that wields a flexible, bevel-tipped needle that Alterovitz and his collaborators patented. When the robot twists the needle’s base, the needle slides through tissue in a curving path governed by the direction of the slant on the needle’s head.
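The curving behavior can be sketched with a toy model. The version below is a simplification under stated assumptions, not the lab's planner: it works in two dimensions, assumes the needle bends at a constant rate as it is pushed forward, and treats a twist of the base as a 180-degree flip that reverses the bend. The step size and curvature values are invented.

```python
import math


def steer_needle(flips, step=0.1, curvature=0.2):
    """Trace a 2-D needle-tip path, one point per insertion step.

    flips: list of booleans; True means the base is rotated 180 degrees
    before that step, which reverses the direction of the bend.
    step, curvature: assumed values chosen only for illustration.
    """
    x, y, heading = 0.0, 0.0, 0.0
    direction = 1  # +1 bends one way, -1 bends the other
    path = [(x, y)]
    for flip in flips:
        if flip:
            direction = -direction
        # The bevel makes the tip turn a little with every push forward.
        heading += direction * curvature * step
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        path.append((x, y))
    return path


# Push 50 steps without twisting, then repeat with one twist at the start.
untwisted = steer_needle([False] * 50)
twisted = steer_needle([True] + [False] * 49)
print(untwisted[-1], twisted[-1])
```

In this model the two runs end at mirror-image points on either side of the straight-ahead line, which is the sense in which the slant of the tip governs where the needle goes.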

Robots’ use in surgical procedures

Human surgeons with regular needles are limited to pretty much a straight-shot path when they’re operating. This means a lot of places in the body are hard for them to reach without damaging other organs. The prostate gland, for example, is a difficult target. When a patient has prostate cancer, a common treatment is radiation seed therapy, in which a doctor has to place tiny doses of radiation precisely on the gland to damage the cancer while hurting as little of the surrounding tissue as possible.

Studies have shown that experienced physicians frequently misplace the radiation therapy. “Patients may end up with these seeds giving a high dose of radiation to healthy tissue, and the actual cancerous tissue isn’t getting enough of a dose,” Alterovitz says. “That can lead to reoccurrence and to side effects on the healthy tissue.”

The robot, on the other hand, can analyze medical images such as ultrasounds to figure out the safest path around organs, predicting how tissues will shift in response to a needle. It can also use the bevel-tipped needle, which is hard for a human hand to wield because our brains can’t easily predict the curved path the needle will travel as it turns.

The Alterovitz lab has tested its medical robot on animal organs, but mostly it practices with tissue phantoms — gels that bend like animal and human tissue. They place obstacles in the tissue, and the robot figures out how to get a needle around them to the target. The robot is good at predicting how much and where the tissue will move in response to the needle.

There are a lot of medical procedures that need the same kind of help, Alterovitz says, such as removing a tumor near the surface of a lung. If surgeons go through the chest, they might disturb the pressure of the lung and collapse it accidentally. A robotic, curving needle could reach any point in the lung by going through the patient’s mouth.

Surgical robots won’t be outright replacing humans next to the operating table, Alterovitz says. But part of the point of robot-assisted surgery is to create a good digital replica of the patient. An accurate 3-D model, enhanced with information about the weight and resistance of each type of tissue, lets a robot, or a human, practice surgeries ahead of time.

“No one wants to be the first patient someone operates on,” Alterovitz says. “We want to let physicians realistically experience what a surgery will be like before they perform it.”

Alterovitz is an assistant professor of computer science in the College of Arts and Sciences. His assistive robotics work, conducted with computer science grad students Gu Ye, Chris Bowen and Jeff Ichnowski, is funded in part by the National Science Foundation and is a collaboration with the Division of Occupational Science and Occupational Therapy in the UNC School of Medicine. The needle steering project, funded by the National Institutes of Health, is also the work of computer science grad students Sachin Patil and Luis Torres, and is a collaborative effort with the UNC School of Medicine, Vanderbilt University, Johns Hopkins University and the University of California, Berkeley. To learn more, visit robotics.cs.unc.edu.

[By Susan Hardy, a writer at Endeavors magazine. This story appeared in the fall 2012 Carolina Arts & Sciences magazine.]

Published in the Fall 2012 issue | Features

Read More

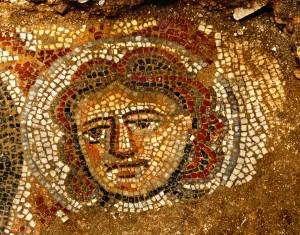

Monumental synagogue building discovered in excavations in Galilee

A monumental synagogue building dating to the Late Roman and…

The Future of the Outer Banks: Climate change’s effect on N.C.’s barrier islands

Laura Moore uses historical maps, geologic data and computational modeling…