For our feature on AI in teaching and research, we thought it fitting to use AI-generated artwork. (Illustration credit: generated with AI by X-Poser, Adobe Stock Library.)

Artificial intelligence has been around for decades, but it is now more accessible and powerful than ever. Although AI presents many challenges, numerous UNC students and researchers are embracing it in their work.

Smartphones, smartwatches, smart appliances and even smart pillows are products of the 21st century, but the concept of artificial intelligence dates back to antiquity.

In Greek mythology, the bronze giant Talos was an autonomous defender of the island of Crete — circling the perimeter three times a day and throwing boulders at invaders. An ancient Buddhist tale tells the story of a seductive female robot known as yantraputraka, meaning “mechanical girl.” Sacred statues in Egypt were believed to be imbued with real minds and could answer any questions put to them.

These days, we have Google.

From smart home devices and editing software to fraud detection and search engines, artificial intelligence has proven pervasive in everyday life.

Streaming services, businesses and social media platforms use it to track user behavior to provide personalized content and advertisements. Health apps analyze biometric data to give insights for a fit lifestyle. Navigation systems use real-time and historical traffic patterns and predictive analytics to provide route recommendations.

What sets today apart from years past is a surge in the amount of available data and computational power, along with the public release of generative AI platforms like ChatGPT and Microsoft Copilot. In the world of artificial intelligence, more data generally makes models and algorithms more robust.

This has led to a rapid expansion in the ways the technology can be used. For some, the ethical issues loom just as large: data privacy, structural bias, copyright, plagiarism and disinformation are all hot topics in the debate over AI ethics.

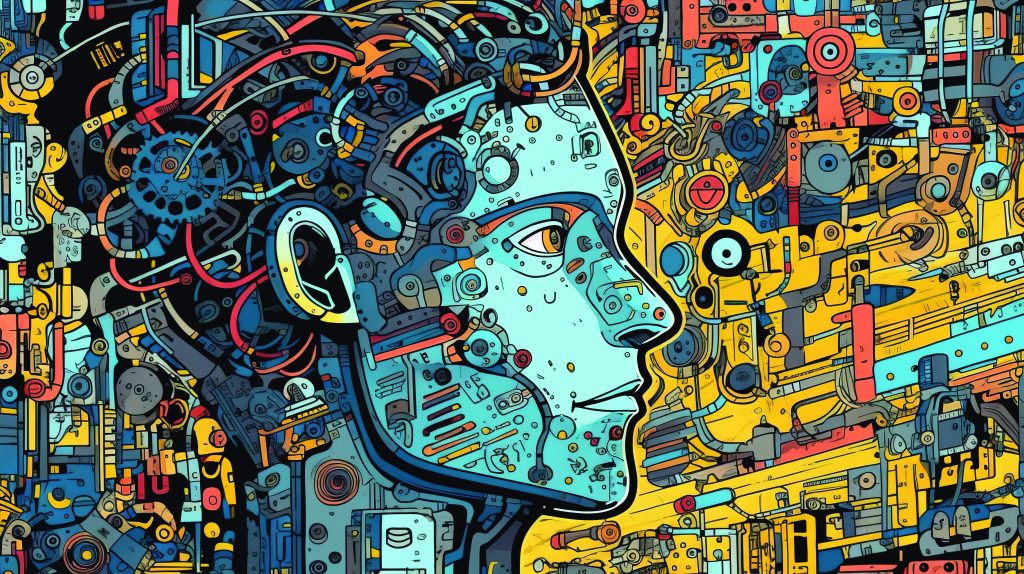

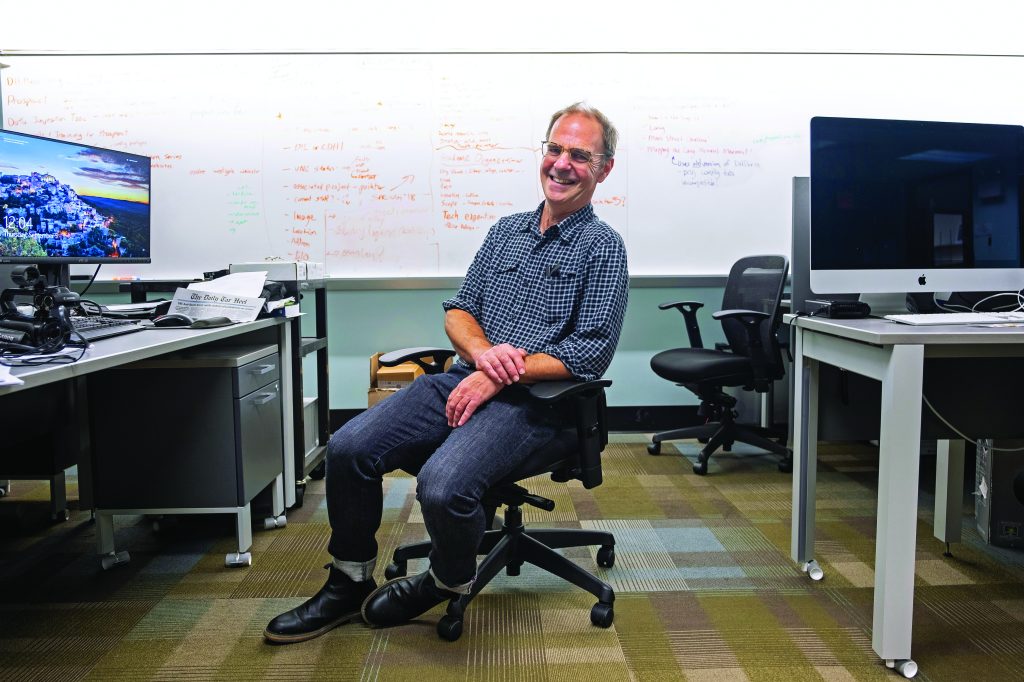

While some view the rise of AI as a threat, others see its potential for learning. Daniel Anderson, professor in the department of English and comparative literature and director of Carolina Digital Humanities/Digital Innovation Lab and the UNC Writing Program, is among the latter. In his research, Anderson studies the intersection of computers and writing.

Throughout history, technology has constantly changed the ways in which we view communication and the world around us.

“Obviously inventions like the printing press or computer were huge,” Anderson said. “But even look at the pencil eraser — that created lots of kerfuffle because writing was no longer permanent. People thought we were going to get stupid because we can make mistakes and erase.”

But each time technology caused a disruption, society learned to adapt, said Anderson.

“I think one thing that’s changed is the pace of artificial intelligence, and maybe that’s scarier,” he said.

Every first-year UNC student is required to take “English Composition and Rhetoric” as an introduction to collegiate-level writing. Last summer, Anderson saw the course as an opportunity to explore ways students can incorporate artificial intelligence into their writing. Students used generative AI platforms throughout the course, with mixed results. AI was helpful for brainstorming topic ideas, identifying keywords and summarizing verified documents. Beyond that, things got messy. For example, when asked for a literature review of the 10 best sources on a topic, the chatbot would generate false references. Inaccurate information presented as fact is called an AI hallucination.

These hallucinations, sometimes humorous, taught students a valuable lesson in verification.

“A lot of what I hoped to see, as a teacher, happened,” said Anderson. “By spending time with these activities, students were able to have the lightbulb go off for themselves.”

Based on this experience, and with funding from the UNC School of Data Science and Society, Anderson built training modules as part of the Carolina AI Literacy initiative to help students learn how to use these platforms responsibly and effectively.

Anderson’s goal is to ensure that all UNC students become AI literate.

“Not all students need a deep understanding of AI, but they should know it’s trained on data and that data can come with limitations and affordances,” he said. “Your bank is going to be using AI; your doctor is going to be using it. It’s useful to have a sense of how this technology is mediating your life.”

Filling in the gaps

Some are just beginning their AI journey, but others have been traveling this road for years, like Junier Oliva, an assistant professor with appointments in both the College’s department of computer science and the School of Data Science and Society.

Oliva’s research focuses on improving machine learning models, a type of AI that learns from data in order to make predictions. Machine learning is effective when using data that follows the same patterns seen during training. But when a model confronts categorically different or missing data, inaccuracies can occur.

A project with Alexander Tropsha, a professor in the UNC Eshelman School of Pharmacy with an adjunct appointment in computer science, aims to improve machine learning capabilities beyond the scope of training datasets. One application for this is in drug discovery.

“When we think about ‘discovery,’ we’re thinking about characterizing things that are different than what’s been previously characterized,” Oliva said. “But since machine learning models aren’t very good at characterizing data that’s very different than what’s been previously seen, these two things are at odds.”

Currently, machine learning is used to flag molecules with potential for drug development. Experiments follow, but if the models are inaccurate, a great deal of time and money is wasted on testing.

The duo’s three-year National Science Foundation project is developing methods to better recognize these machine-learning limitations. By designing tests that assess how well a model can extrapolate beyond the scope of its training data, the researchers can flag predictions that are likely unreliable. They are also building methods that will tell researchers what additional data would improve the model’s training.

Another of Oliva’s projects is with Sean Sylvia at the Gillings School of Global Public Health. It develops models to assist human reasoning — what can be described as human-AI collaboration.

“There are many sensitive application areas where we still want a human in the loop,” Oliva said. “For example, health care: We still want the doctor or clinical provider to ultimately be responsible for treatment. Ideally, we want to create a system where the human plus AI are working together better than either could in isolation.”

Think of patient records. In an ideal world, doctors can read these histories before the patient appointment. In reality, records can be long and complex, and doctors’ time is limited.

Focusing on colorectal cancer screening and treatment, Oliva and Sylvia are creating a system that can review patient records and distill core components. This can then be combined with machine-learning predictions and explanations drawn from ancillary data to make recommendations.

Although both projects are rooted in health, that’s just one application for Oliva’s work. Ideally, it could be used in a variety of time-sensitive situations or settings where data is incomplete, such as defense, search and rescue, interactive retrieval, autonomous vehicle development and public policy.

“We want these models to work across a broad range of potential areas,” he said. “This gives us a lot of opportunities to help solve really interesting and important problems.”

Answering the “why”

At a glance, Corbin Jones’ bio can make it difficult to nail down his research interests. Ecuadorian grasses, the coronavirus, microbes and fruit flies have all been subjects in his lab.

“But you can link them all,” Jones said. “The big question I’m interested in is the evolution of novelty — how do little differences in DNA make us distinct, and how do new genes form?”

As a professor in both the College’s department of biology and the department of genetics and Integrative Program for Biological and Genome Sciences in the UNC School of Medicine, Jones investigates histones and their role in gene expression.

DNA is a huge molecule: If the DNA in all your cells were unwound and strung together, it would stretch about twice the diameter of the solar system. Histones are proteins that help package this massive amount of DNA into a highly compact form.

“Your DNA is all wrapped up and packaged, but you can’t get in there to use it unless it’s opened. This histone code determines how accessible your DNA is to be used as that genetic blueprint,” he explained.

A deeper understanding of this process would give a fuller picture of how human genetics work and advance research surrounding genetic engineering and genetic-based diseases, like cancer.

In 2022, Jones and collaborators from biology, the Renaissance Computing Institute and the schools of Data Science and Society, Medicine and Pharmacy won the Vice Chancellor for Research’s Creativity Hub Award. The team is now using AI to crack this code.

Artificial neural networks are a common form of machine learning loosely modeled on the brain, where new experiences and learning create new connections among neurons. Although they are typically used to filter and categorize large amounts of data and to make predictions, artificial neural networks are less useful for explaining why those conclusions were reached.

“AI is basically a black box,” Jones said. “You shove a bunch of stuff in there and it makes a prediction. But one of the biggest problems with AI is you don’t know why it’s making that prediction, and that’s where it can get into problems of bias.”

By developing visible neural networks, researchers can better understand the path taken to those outcomes. They can then use that information to design experiments investigating how the molecular mechanism actually works.

“We are interested in how biology stores and manipulates information to inspire new ways of building AI tools,” he said. “To me, AI is the next wave of tools that we can apply to our work.”

All in on video

In 2023, internet users dedicated an average of 17 hours per week to online video consumption. Every day, 720,000 hours of video are uploaded to YouTube — the equivalent of 82 years. TikTok alone has just under 2 billion users. Now more than ever, we see the world through a camera.

“Video plays a really significant role in our lives,” said Gedas Bertasius, assistant professor in the department of computer science. His work is focused on video understanding and computer vision technologies.

Video understanding is the process by which AI is trained to comprehend video through its visual, audio and textual elements, something we as humans take for granted.

“This is something we don’t even need to think about when we watch a video,” Bertasius said. “But when AI is given a video, what it sees is just a bunch of pixels or waveforms — and it has to make sense of these.”

Improving the ways in which AI understands video advances its video retrieval and generative capabilities. It also helps lay the foundation for first-person vision technologies. A subfield of computer vision, first-person vision uses wearable cameras — installed in eyeglasses, for example — to record and analyze video throughout a task.

“Most of our research is about machine learning, but I’m very interested in human learning as well,” he said. “So how can we use machine learning to make human learning more effective? The long-term vision is to develop some sort of personalized AI assistant.”

One concept is that people could wear augmented reality glasses to help with daily tasks or to acquire new skills. Cooking a new dish? The AI assistant could remind you to use olive oil instead of butter. Learning to play tennis? The AI coach could give advice on adjusting hand positioning.

A collaboration among 14 universities and the Meta Fundamental Artificial Intelligence Research team is examining the perception side of this technology, analyzing skilled tasks to translate expert knowledge to beginners. With more than 800 participants, the Ego-Exo4D project combined data from wearable and bystander camera views with feedback from skilled experts across a variety of activities, like sports, music and dance.

This approach teaches AI how to understand complex activities in a way similar to our natural perception.

“I’m excited to see this technology make people’s lives better in a lot of different ways,” Bertasius said.

Another researcher harnessing AI video analysis is Adam Kiefer, assistant professor in the department of exercise and sport science, core faculty member in the Matthew Gfeller Center and co-director of the STAR Heel Performance Lab.

Kiefer’s research uses AI to predict athlete performance and to improve injury prevention and recovery.

One project, funded by the National Institutes of Health, built an extended reality assessment platform to analyze athlete behavior and customize training for prevention of musculoskeletal injuries.

During trials, an athlete is fitted with a wireless virtual reality headset that simulates a sport-like scenario. The athlete then runs through a drill, such as running toward a goal while avoiding a virtual defender. Using this platform, body-worn sensors and sideline cameras, researchers gather a slew of data on movement patterns, including muscle activation and eye tracking.

“We can feed that data into our virtual defenders in real-time so they know, for example, there’s an 80% chance that this athlete is going to go to the right of the defender, so shift over and take that away,” Kiefer said.

So far, the team has focused on building the platform, called Automated Digital Assessment and Training (ADAPT). The next phase includes follow-up studies with patient populations to test its effectiveness as an intervention in injury recovery.

A spinoff of this project dives deeper into what may seem like science fiction. Inspired by ways to improve virtual defenders, Kiefer’s team is working on creating “digital twins,” digital models of people to be used in simulations and training. By building an in-depth library of data about an individual and pairing it with predictive analytics and genetic algorithms, they hope to create digital twins that could predict the performance not only of athletes but also of service members, students or workers learning a new skill.

“If we can predict performance, then we can also do a better job of preparing them to be more adaptable and resilient,” he said.

For Kiefer, AI’s ability to code and analyze vast amounts of data frees up time for innovative endeavors like this.

“I think we’re in a really unique period where — with the right theoretical approach and data — AI can help us better understand some of these long-standing questions,” Kiefer said. “If we were to have this conversation three years from now, I think it’s going to look completely different, too, and I’m looking forward to going on that journey.”

By Megan May

Published in the Spring 2024 issue | Features
